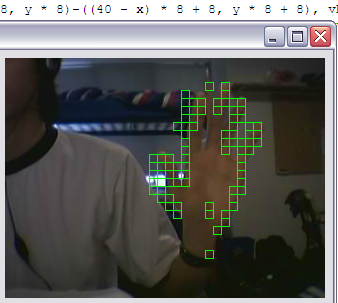

Here's a snapshot of the things I played with to investigate motion detection. I was hoping to find some invariant in shapes or in my hand, but alas, I found none that is reliable enough. The goal is to control things on my screen just by waving my hand in front of the webcam. I have several ideas, each one different depending on how I want to design the interaction.

If I just want a really simple back/forward instruction, I can look for blobs at the bottom and on the sides of the screen. First I take the difference of two successive frames and filter out the noise and lone pixel changes (I only want big changes). Then I find the biggest connected component by DFS or BFS, estimate its major/minor axes using a very simple approximation, and probably get the centroid as well. That gives a matrix of the biggest motion, along with its mean position.

Then, within an interval of 0.6 ± 0.2 seconds, I look for another mean position that differs from the previous one by a fixed percentage, and after that a third mean position that is roughly the same as the first. This approximates a hand lifting from the bottom (the first motion matrix), to the side (the second motion matrix), and back to the bottom (the third motion matrix).

Of course, to avoid picking up other motions, such as the body or head moving and people walking by, we have to make some assumptions. The user will not move too much. The hand usually moves from the bottom to the side and back to the bottom (looking only for this pattern should cut out lots of false positives). The hand generates the largest motion in the frame. Finally, I set a threshold on the pixel change between frames for that biggest connected component.
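To make the steps above concrete, here is a minimal sketch in plain Python. It is not the code I actually ran: frames are assumed to be nested lists of grayscale values, the function names (`motion_mask`, `largest_blob`, `describe`, `is_swipe`) and all thresholds are made up for illustration, the bounding box stands in for the "very simple approximation" of the axes, and I am assuming the 0.6 ± 0.2 second window applies to each step of the gesture.

```python
from collections import deque

def motion_mask(prev, curr, threshold=30):
    """Mark pixels whose grayscale value changed by more than `threshold`."""
    return [[abs(c - p) > threshold for p, c in zip(prow, crow)]
            for prow, crow in zip(prev, curr)]

def largest_blob(mask, min_size=4):
    """Return the pixels of the largest 4-connected component, found by BFS.
    Components smaller than `min_size` are treated as noise and ignored."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    best = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            seen[y][x] = True
            queue, blob = deque([(y, x)]), []
            while queue:
                cy, cx = queue.popleft()
                blob.append((cy, cx))
                for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(blob) >= min_size and len(blob) > len(best):
                best = blob
    return best

def describe(blob):
    """Centroid (row, col) plus bounding-box (height, width) as crude axes."""
    ys = [p[0] for p in blob]
    xs = [p[1] for p in blob]
    centroid = (sum(ys) / len(blob), sum(xs) / len(blob))
    extents = (max(ys) - min(ys) + 1, max(xs) - min(xs) + 1)
    return centroid, extents

def is_swipe(samples, min_shift=0.25, tolerance=0.1, lo=0.4, hi=0.8):
    """samples: three (time_s, x) pairs, with x normalised to the range 0..1.
    True if the second position moved away by at least `min_shift` and the
    third came back to within `tolerance` of the first, with each step
    taking between `lo` and `hi` seconds (assumed reading of 0.6 +/- 0.2)."""
    (t0, x0), (t1, x1), (t2, x2) = samples
    in_time = lo <= t1 - t0 <= hi and lo <= t2 - t1 <= hi
    return in_time and abs(x1 - x0) >= min_shift and abs(x2 - x0) <= tolerance
```

In use, I would call `motion_mask` on each pair of successive frames, feed the result to `largest_blob` and `describe`, keep the last few centroids with their timestamps, and pass them to `is_swipe`. A real version would read frames from the webcam and would probably use a library for the connected-component step.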

There's also another idea, which is basically to identify which pixels are foreground and which are background. This ensures that only objects close enough to the webcam can affect the on-screen objects. It has the added benefit of not constraining the hand shape or movement, and it allows other objects to control on-screen objects simply by waving them near the webcam. This is hard, however, because we're estimating objects' distance from a single monocular frame, and within a very short range, from about 0 to roughly 1 foot.

Another one is to use Voronoi diagrams. However, varying lighting conditions, reflections, different skin colors, users possibly wearing gloves or long sleeves, and the similarity of the hand's skin color to the head's and body's mean this method is inaccurate, if it works at all.

Another method is to detect edges, then connect regions with small color differences. Of all the resulting regions, look for the one that most closely approximates the shape of a hand. This needs quite a lot of processing, and it imposes a shape restriction on the hand, which will become uncomfortable to hold after some time.
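The "connect regions with small color difference" step could look roughly like the sketch below. It is a simplification under my own assumptions: the image is a nested list of grayscale values rather than color, the edge detection pass is skipped, and `label_regions` and its `tol` parameter are illustrative names. The hand-shape matching that would follow is not shown.

```python
def label_regions(image, tol=10):
    """Group 4-connected pixels into regions, joining two neighbours when
    their grayscale values differ by at most `tol`. Uses an explicit
    stack (DFS). Returns (labels, region_count)."""
    h, w = len(image), len(image[0])
    labels = [[-1] * w for _ in range(h)]
    count = 0
    for y in range(h):
        for x in range(w):
            if labels[y][x] != -1:
                continue  # already assigned to an earlier region
            labels[y][x] = count
            stack = [(y, x)]
            while stack:
                cy, cx = stack.pop()
                for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                    if (0 <= ny < h and 0 <= nx < w
                            and labels[ny][nx] == -1
                            and abs(image[ny][nx] - image[cy][cx]) <= tol):
                        labels[ny][nx] = count
                        stack.append((ny, nx))
            count += 1
    return labels, count
```

Because neighbours are compared pairwise, a smooth gradient can chain into one big region, which is one reason the matching step afterwards is the expensive and fragile part.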